WTF is Activation?

and what's an Aha moment?

Hey there, it’s Jacob at Retention.Blog 👋

I got tired of reading high-level strategy articles, so I started writing actionable advice I would want to read.

Every week I share practical learnings you can apply to your business.

Activation and Aha! Moments

Understanding your activation moments is one of the most important things you can do to drive retention.

The stage for good retention is set the moment a new user first tries your app.

The trick is figuring out how to get them to unlock and understand the value of your app, fast.

But not too fast.

Activation and Aha! moments have become a popular framework for thinking about how to solve this problem.

So what is an Aha! Moment?

Typically, this is the moment where your user experiences the core value of your app for the first time.

They don’t have to say “Aha!” out loud, but if you can get them to do that, you’re probably on to something :)

This will, of course, differ from product to product, but some examples might be:

For a workout app, a user starting their first guided workout

In Canva, it may be a user exporting their first design

For a meditation app, completing their first guided meditation

For Duolingo, completing their first lesson

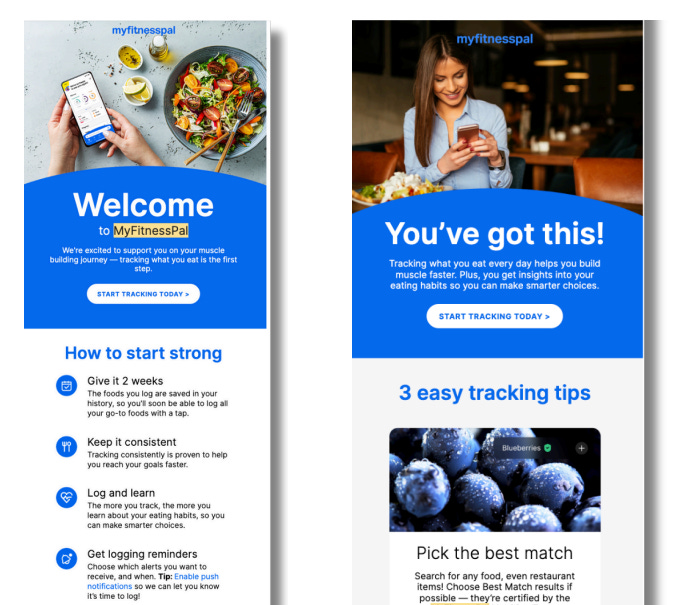

For MyFitnessPal, tracking their first meal

For Kindle, starting or downloading their first book

I think you get it.

It’s that initial moment of joy from the user when they realize that your product can start to solve their problems and help them.

There are the emotional and experiential elements, but there is also the quantitative side. Is this moment predictive of long-term retention or value? We’ll get more into that in a minute.

First, I’m going to talk about the journey toward this activation or Aha moment.

Next, I’ll share some resources from my friend Olga Berezovsky at Data Analysis Journal on how to truly do this quantitatively.

Then, we’ll talk about how to actually get people to complete this activation moment and change behavior.

Definitions and clarifications

There are Aha! moments, activation moments, and then there is your activation metric.

Typically, that Aha! moment is when a user first uncovers the value in your app. This doesn’t necessarily mean it’s the same as your activation metric. It depends on how your company or team uses it.

Activation moments can be a series of moments that build toward your core definition of “Activation,” or your activation metric.

Some teams only have one “activation” moment, but they also have setup moments to lead to activation, and habit moments that reinforce activation.

I generally think of the Aha moment as that first real moment where the user experiences your core value.

The “activation metric” is your quantitative (ideally causally proven) definition of activation: the metric you use across dashboards to calculate your activation rate.

For example, for a workout app, the Aha moment could be completing your first guided workout.

The activation metric, meanwhile, might be completing two workouts in the first 7 days.
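To make the distinction concrete, here is a minimal Python sketch of how a metric like “two workouts in the first 7 days” might be computed for a single user. The function name and the input shape (a signup timestamp plus a list of workout timestamps) are assumptions for illustration, not taken from any particular analytics tool.

```python
from datetime import datetime, timedelta


def is_activated(signup_at, workout_times, min_workouts=2, window_days=7):
    """Hypothetical activation check: at least `min_workouts` workouts
    completed within `window_days` days of signup."""
    window_end = signup_at + timedelta(days=window_days)
    # Only count workouts that fall inside [signup, signup + window).
    in_window = [t for t in workout_times if signup_at <= t < window_end]
    return len(in_window) >= min_workouts


signup = datetime(2024, 1, 1, 9, 0)
workouts = [datetime(2024, 1, 2, 18, 0), datetime(2024, 1, 5, 7, 30)]
print(is_activated(signup, workouts))      # True
print(is_activated(signup, workouts[:1]))  # False
```

Keeping the count and the window as parameters makes it cheap to test neighboring definitions (1 workout in 3 days, 3 workouts in 14 days, and so on) later.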

New Price Power Podcast Episode!

Do you know Barbara Galiza? She is an all-around attribution and conversion-tracking all-star. She has built growth teams from 0 to 1 and worked with Microsoft, HER, WeTransfer, and more to fix their growth marketing analytics.

What you’ll learn

The optimal 3-event conversion structure for Meta/Google (and why tracking more hurts performance)

Why speed of event delivery is one of the strongest levers for match quality & cheaper CPAs

How to incorporate value signals (trial filters, buckets, predicted value) without full LTV modeling

Why using PII (hashed email/phone) dramatically improves attribution & optimization

and more!

Listen here:

➡️ Spotify 🔗

➡️ Youtube 🔗

➡️ Apple Podcasts 🔗

ok, back to activation…

Journey Towards Activation

So how do we get started?

Before we do anything quantitative, we need to start brainstorming.

What in the world could our activation metric be?

You tell me.

Generally, you’re going to have a sense of the possible moments or events that could be your activation metric.

But going back to what we said above, start thinking about those emotional moments in your app where people start to understand your core value.

What events do those align with?

Let’s continue with our workout app example. I’m going to brainstorm some possible events:

Instructor selected

Completed onboarding

7-day workout plan picked

Completed 1 workout

Completed 2 workouts of different types

Completed a multi-day workout plan

Received personalized workout recommendations

Recorded feedback on my workout

Okay, that’s good to start.

I think you get a sense of what we’re looking for.

We’re looking for events in our app that happen relatively early, can be completed by all new users, and are connected to our core value.

If your app is built around generating personalized recommendations, maybe “Received personalized workout recommendations” could be more viable.

If your app is built around each person getting a specific plan driven by a specific workout instructor, maybe “Instructor selected” is interesting.

My point is that even for products that may seem similar (workout apps), there are going to be nuances to your core value that influence your activation and Aha moments.

Depending on how big your team is, it can also be valuable to get multiple people involved in this brainstorming and make it a collaborative process.

Realistically, you could probably come up with all the possible activation moments on your own. But once you figure out what it is, I can guarantee that you won’t be able to move it much on your own.

Changing retention and engagement patterns is a hard problem that has to be solved collaboratively. Ideally, you want the whole company or team on board.

They need to understand what you’re doing and why. So the more buy-in you get early, the better.

Now we have a good list of possible metrics.

Can any of the events be done multiple times? If so, add versions for completing them 1, 2, and 3 times to start.

E.g., Completed 1 workout, Completed 2 workouts, Completed 3 workouts

There are a few ways to start narrowing these down.

From Merci Grace and Lenny’s Newsletter:

“Once you have a candidate for your activation metric, you need to test whether it works for your business. To do that, check that these things are true:

You can ship experiments. You can build good in-product tests with enough power to make them useful.

You can post wins. You are able to increase the rate of activation over months or quarters.

Increasing the rate of activation does not destroy the relationship between that activation metric and longer-term retention.

If these are true, you have a foundation to experiment with adjacent funnel levels, like investing more in demand gen or monetization.”
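The “enough power” check can be done before you ship a test. The sketch below uses the standard normal-approximation formula for comparing two proportions to estimate how many users each variant needs. The 30% baseline and 33% target activation rates are made-up numbers, and the function name is hypothetical.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(p_control, p_treatment, alpha=0.05, power=0.80):
    """Approximate users needed per variant to detect a change from
    p_control to p_treatment with a two-sided two-proportion z-test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p_control + p_treatment) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p_control * (1 - p_control) + p_treatment * (1 - p_treatment))
    ) ** 2
    return ceil(numerator / (p_treatment - p_control) ** 2)


# Hypothetical: 30% of new users activate today; we hope a test lifts that to 33%.
print(sample_size_per_variant(0.30, 0.33))
```

If the result is far more new users than you get in a few weeks, that’s a sign this metric (or the size of effect you’re hoping for) will be hard to experiment on at your scale.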

So thinking about that, do any of the events we identified seem like things that we wouldn’t be able to increase or influence over a period of time?

At a certain point, “Instructor selected” will likely plateau and can’t be pushed much higher over the long term.

We also may not want everyone to do “7-day workout plan picked,” since some users benefit from selecting shorter plans.

And “Completed onboarding” probably isn’t aligned enough with our core value.

So we’ll narrow down to:

Completed workout (1, 2, and 3 times)

Completed 2 workouts of different types

Completed a multi-day workout plan

Received personalized workout recommendations

Recorded feedback on my workout (1, 2, and 3 times)

We’ve “qualitatively” narrowed down our list. Now let’s “quantitatively” narrow down.

This is much easier with a tool like Mixpanel or Amplitude than by hand. It’s possible with many other data analysis tools, but the main things you need to be able to do are:

Create segments of users who have completed these events

Plot those segments against user retention to see how they differ
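Outside of Mixpanel or Amplitude, these two steps can be sketched in plain Python. This assumes you can export, for each user, the set of events they completed and the days since signup on which they were active; the field names and data are hypothetical. It computes classic N-day retention (active on exactly day N) for each segment.

```python
def retention_curve(users, days=(1, 7, 14, 30)):
    """Share of users active on each day N after signup (N-day retention)."""
    if not users:
        return {d: None for d in days}
    return {d: sum(d in u["active_days"] for u in users) / len(users) for d in days}


def compare_segments(users, event, days=(1, 7, 14, 30)):
    """Retention curves for users who did vs. did not complete `event`."""
    did = [u for u in users if event in u["events"]]
    did_not = [u for u in users if event not in u["events"]]
    return {"did": retention_curve(did, days), "did_not": retention_curve(did_not, days)}


# Hypothetical users: events completed, and days since signup they were active.
users = [
    {"events": {"completed_workout"}, "active_days": {0, 1, 7, 30}},
    {"events": {"completed_workout", "recorded_feedback"}, "active_days": {0, 1, 7, 14}},
    {"events": {"completed_onboarding"}, "active_days": {0, 1}},
    {"events": set(), "active_days": {0}},
]

for name, curve in compare_segments(users, "completed_workout").items():
    print(name, curve)
```

Each curve is one line on the kind of chart you’d build in an analytics tool; the bigger and more persistent the gap between “did” and “did_not,” the stronger the candidate.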

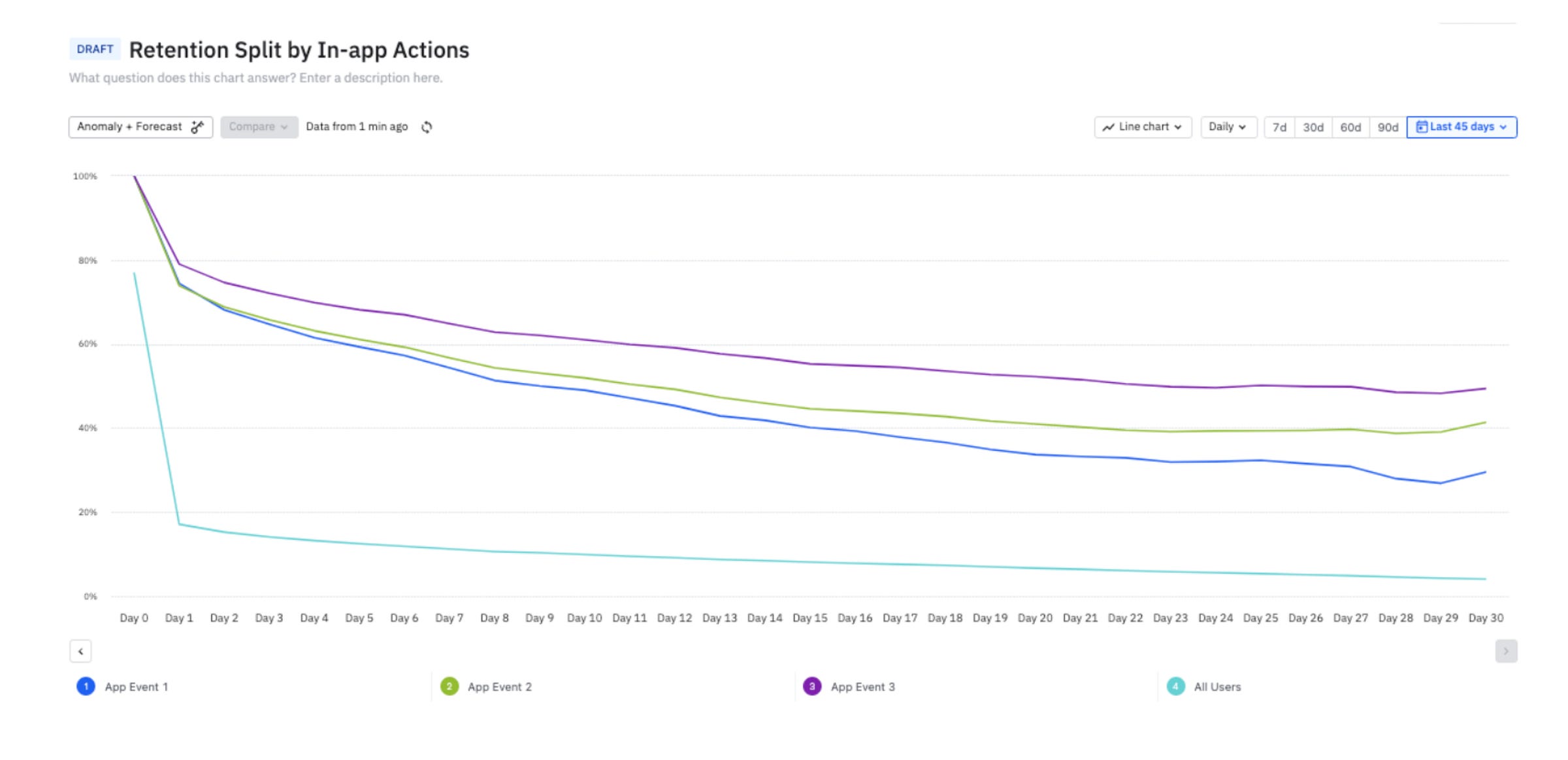

These are the types of charts we want to create:

We want to understand how strongly completing each of these events correlates with retention.

Visually, it’s easier to start picking out which events are front-runners.

Once we’ve spotted the top 3 or so events, we’ll want to create segments for each frequency of those events as well.

And let’s add time as well as frequency. E.g., Completed 1 workout in 7 days, Completed 2 workouts in 7 days, etc.
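Combining frequency and time gives a small grid of candidate definitions. Here is a minimal sketch of scoring that grid, assuming a hypothetical export with the days since signup of each workout (day 0 is the signup day) and a D30 retention flag per user; the data is invented.

```python
from itertools import product


def hit_definition(user, min_count, window_days):
    """True if the user completed `min_count`+ workouts in their first `window_days` days."""
    return sum(1 for day in user["workout_days"] if day < window_days) >= min_count


def retained_share(group):
    """Share of a group retained at D30, or None for an empty group."""
    return sum(u["retained_d30"] for u in group) / len(group) if group else None


def candidate_grid(users, counts=(1, 2, 3), windows=(3, 7, 14)):
    rows = []
    for min_count, window in product(counts, windows):
        hit = [u for u in users if hit_definition(u, min_count, window)]
        miss = [u for u in users if not hit_definition(u, min_count, window)]
        rows.append({
            "definition": f">= {min_count} workouts in {window} days",
            "share_hitting": len(hit) / len(users),
            "d30_if_hit": retained_share(hit),
            "d30_if_miss": retained_share(miss),
        })
    return rows


# Hypothetical export: workout days since signup, plus a D30 retention flag.
users = [
    {"workout_days": [0, 2, 5], "retained_d30": True},
    {"workout_days": [1, 6], "retained_d30": True},
    {"workout_days": [0], "retained_d30": False},
    {"workout_days": [], "retained_d30": False},
    {"workout_days": [4, 10], "retained_d30": False},
]

for row in candidate_grid(users):
    print(row)
```

You’re looking for definitions with a large gap between the “hit” and “miss” retention that a meaningful share of new users can still reach; a definition almost nobody hits is hard to build experiments around.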

We can also do correlation analysis in Excel, but it gets complicated pretty quickly (at least for me…).

The other thing to be aware of is how we’re defining retention. Are we measuring general session retention? Or do we have a higher bar, where retention means doing a meaningful action in the app? Raising the bar above simply opening the app means fewer users count as retained, so you also need more data.

Realistically, how quantitative we get depends on the size of our product and how much data we have.

If we’re newer or smaller, we may not have enough data to build a good regression model to prove out the connection.

It’s likely okay to stop at trying to understand correlation in Mixpanel or Amplitude, and then try to run some experiments against these metrics to see if you can influence retention.

At the end of the day, you’ll need to run experiments against the metric anyway, so don’t waste time building a complicated model when you don’t have enough data for it to be accurate.

I'm sorry to interrupt you again, but we have an unreal guide on price testing on the Botsi Blog from Michal Parizek at Mojo that I don't want you to miss.

➡️ Check out the post here (and send us a like 😀).

From Michal:

"Our success rate for pricing experiments was 56%, compared to only 14% for design, copy, and layout tests. That difference alone explains why we’ve prioritized pricing over everything else.

In fact, we ran nearly 40 pricing experiments in the past two years—twice as many as any other type."

Where are we at now?

We have a few possible metrics

We’ve plotted them against retention to check whether there is a correlation

We’ve filtered out the ones that don’t make sense from a qualitative perspective

Now for the big guns

We want to run a correlation or regression analysis on the data we have to verify that we’re looking at the right metrics.

The first post I want you to reference from Olga Berezovsky is:

How to do linear regression and correlation analysis

The second post is: Why Your Activation Analysis Is Wrong - And How to Fix It

Yes, this is a paid post, but if you’re truly working on finding your activation metric right now, pay for a month, pay for a year, it doesn’t really matter. The ROI for your business from this and other posts from Olga will be immeasurable.

At the end of this, we’ll have done some correlation or regression analysis and will hopefully have an activation metric definition with a strong, proven correlation to retention.

Image from Lenny’s and Olga’s post above.

We’re done right?

Of course…not.

Correlation does not prove causation.

The next step is to try influencing our newfound activation metric!
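In practice, that usually means an A/B test: ship an intervention that should move the activation metric, check whether it does, and later check whether retention moves with it. Below is a minimal two-proportion z-test for reading such a test. The counts are invented, and most experimentation tools will run an equivalent (or more sophisticated) test for you.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for a difference between two conversion rates.
    Returns (absolute lift of B over A, z statistic, p-value)."""
    p_a, p_b = successes_a / n_a, successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_b - p_a, z, p_value


# Hypothetical test: control vs. a new onboarding flow, measuring the activation metric.
lift, z, p = two_proportion_z_test(300, 1000, 345, 1000)
print(f"lift={lift:+.3f}, z={z:.2f}, p={p:.3f}")
```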

Let’s say that we find that “2 workouts completed in the first 7 days” is our new activation metric.

We see a strong correlation between this metric and 30-day retention.

Now we need to understand all the levers and break down the path users take to get there.

Our activation metric is the output.

What are all the inputs towards that metric?

Onboarding completion rate

Account creation rate

Workout preferences selected

Push notification opt-in rate

Early re-engagement notification tap rate

1st workout completed (gotta do one before you can do two!)

User acquisition channel source

So now we go to work on experiment ideas for how to improve all of these inputs.

Use RICE prioritization (Reach, Impact, Confidence, Effort) to figure out which tests to start with.
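RICE is usually computed as (Reach × Impact × Confidence) / Effort. A small sketch of scoring and ranking ideas; the ideas, numbers, and units (users per period, person-weeks) are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    reach: float       # users affected per period
    impact: float      # relative scale, e.g. 0.25 (minimal) to 3 (massive)
    confidence: float  # 0.0 to 1.0
    effort: float      # person-weeks

    @property
    def rice(self) -> float:
        return self.reach * self.impact * self.confidence / self.effort


# Hypothetical ideas and scores for the workout-app inputs above.
ideas = [
    Idea("Ask 'Ready to work out now?' after onboarding", 8000, 0.5, 1.0, 0.5),
    Idea("Reminder at the user's planned workout time", 10000, 1, 0.8, 2),
    Idea("Redesign onboarding flow", 4000, 2, 0.5, 4),
]

for idea in sorted(ideas, key=lambda i: i.rice, reverse=True):
    print(f"{idea.rice:>8.0f}  {idea.name}")
```

The scores are only as good as the guesses behind them, so it helps to have the team score ideas together rather than one person filling in the numbers.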

The largest predictor of users getting to complete 2 workouts will be, “Did they complete 1 workout?”

This sounds obvious, but it illustrates how we want to work backwards to figure out the different steps to optimize.

It’s obvious 1 workout leads to 2, but what leads to 1 workout? Slightly less obvious.
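Working backwards is easier when you lay the path out as a funnel and look at step-to-step conversion rather than only the end number. A minimal sketch with invented counts for one signup cohort; the step names are hypothetical.

```python
def step_conversion(funnel):
    """funnel: ordered list of (step_name, users_reaching_step)."""
    return [
        (f"{prev_name} -> {name}", n / prev_n)
        for (prev_name, prev_n), (name, n) in zip(funnel, funnel[1:])
    ]


# Hypothetical counts for one cohort, ending at the activation metric.
funnel = [
    ("installed", 10000),
    ("completed_onboarding", 7000),
    ("first_workout", 3500),
    ("second_workout_in_7_days", 2100),
]

steps = step_conversion(funnel)
for step, rate in steps:
    print(f"{step}: {rate:.0%}")

weakest_step, weakest_rate = min(steps, key=lambda s: s[1])
print("Biggest drop-off:", weakest_step)
```

In this made-up cohort, the weakest step is getting from onboarding to the first workout, which is exactly the “what leads to 1 workout?” question.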

Then, before you start prioritizing, remember to break these inputs and funnels down by different segments.

For our workout app, there are some key early questions we ask our users (or should ask) that help explain differences in performance.

For example, the user who says, “I work out at home” vs “I work out at a gym” will likely have different results.

The user who works out at home can start anytime they’re at home. The other person has to remember the next time they go to the gym.
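Breaking an input down by an onboarding answer is a simple group-by. In this sketch the field names (`workout_location`, `activated`) and the data are hypothetical; users who skipped the question land in an “unknown” bucket so they don’t silently disappear.

```python
from collections import defaultdict


def activation_rate_by_segment(users, segment_key):
    """Activation rate for each answer to an onboarding question."""
    totals = defaultdict(int)
    activated = defaultdict(int)
    for user in users:
        segment = user.get(segment_key, "unknown")
        totals[segment] += 1
        activated[segment] += user["activated"]  # True counts as 1
    return {seg: activated[seg] / totals[seg] for seg in totals}


# Hypothetical users with their answer to "Where do you work out?"
users = [
    {"workout_location": "home", "activated": True},
    {"workout_location": "home", "activated": True},
    {"workout_location": "home", "activated": False},
    {"workout_location": "gym", "activated": False},
    {"workout_location": "gym", "activated": True},
    {"workout_location": "gym", "activated": False},
    {"activated": False},  # skipped the question
]

print(activation_rate_by_segment(users, "workout_location"))
```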

Maybe you want to ask both users when they typically work out, or when they plan to work out next, and send them a reminder at that time?

Or you can ask, “Are you ready to work out now?”
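If you do ask when someone plans to work out, turning the answer into a send time is straightforward. A minimal sketch, assuming naive local datetimes (a real system would need to handle each user’s time zone) and a hypothetical 30-minute lead time:

```python
from datetime import datetime, time, timedelta


def next_reminder(now, planned_time, lead=timedelta(minutes=30)):
    """Next moment to send a reminder `lead` before the user's planned workout time."""
    candidate = datetime.combine(now.date(), planned_time) - lead
    if candidate <= now:
        # The reminder slot has already passed, so schedule it for the next day.
        candidate += timedelta(days=1)
    return candidate


print(next_reminder(datetime(2024, 1, 1, 8, 0), time(18, 0)))   # 2024-01-01 17:30:00
print(next_reminder(datetime(2024, 1, 1, 17, 45), time(18, 0)))  # 2024-01-02 17:30:00
```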

This is just one example of how the intervention you should test will not always be the same across all segments.

Be careful not to create too many unique interventions for too many segments. But if you have a few big groups of users with different use cases, they may need different experiences.

Here are a few different ideas for how to improve early activation rates:

Create a Custom New User Experience: Don’t just drop users onto a generic homepage after they’ve completed the initial sign-up or paywall. Instead, design a dedicated flow that guides them directly towards experiencing the core value of your app.


Integrate Guidance Seamlessly into the UI: Avoid relying heavily on intrusive tooltips. Instead, build the guidance directly into the user interface. If a user is clearly struggling or tapping around confused, then a tooltip can be a helpful intervention, but the primary design should make the next steps obvious.

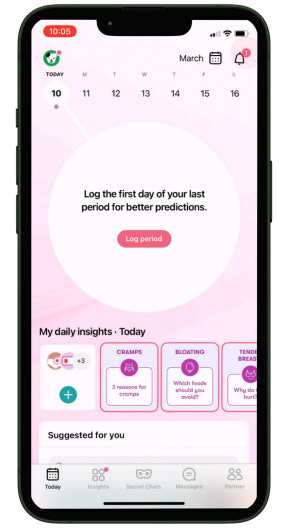

Flo, for instance, integrates guidance into its UI to ensure new users know exactly what to do.

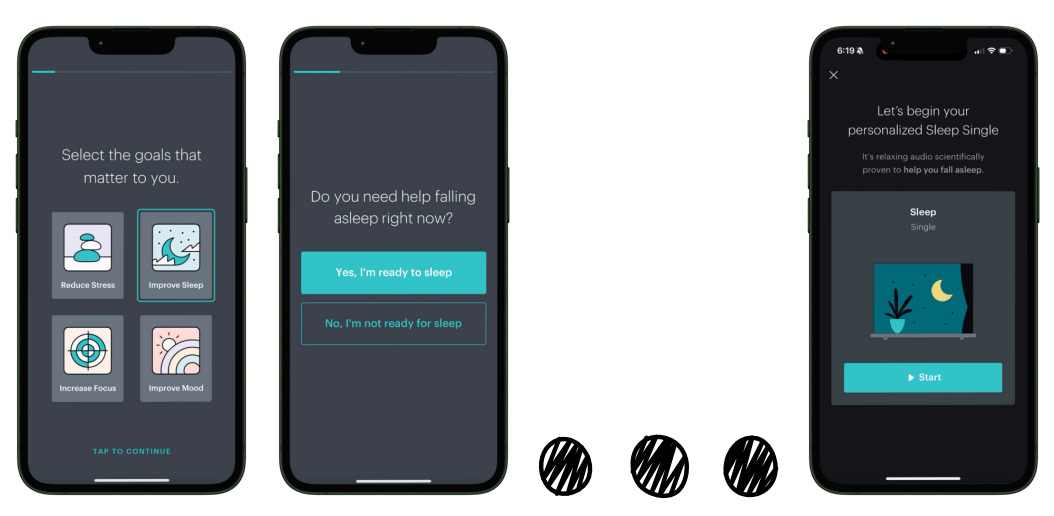

Personalize Early Experiences: Use the data you collect during onboarding to tailor the experience. If a user indicates a specific goal, immediately offer content or features related to that goal.

For example, Balance found that if a user selected “Sleep” as a goal, asking them if they were ready to sleep now allowed for better personalization of recommendations. This makes the app feel immediately relevant and valuable.

Build Intent and Excitement with Positive Emotional Nudges: Long onboarding flows can be highly effective for products that have an emotional connection. Use this time to build excitement and commitment.

Here are some onboarding tests:

Strategically Place Account Creation: While early account creation can cause drop-off, it can also lead to higher-intent users. If you’re confident in your ability to convert users through follow-up email campaigns, collecting an email early might be valuable.

Leverage Lifecycle Marketing for Activation

Your lifecycle marketing channels (email, push notifications, in-app messages) are powerful tools to guide users to their “Aha Moment” if they haven’t reached it organically.

Drive Core Product Actions: Identify the key action that signifies a user has experienced the “Aha Moment” and use your lifecycle campaigns to push users towards it. MyFitnessPal, for instance, knows that tracking food is the most important first step for new users, so their early messages are all geared towards encouraging that action.

Implement welcome and early re-engagement notifications: The first 24 hours to a few days after installation are crucial, because that is when a user is most likely to activate.
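The logic behind an early lifecycle sequence often boils down to “send the next nudge if it’s due and the user still hasn’t done the core action.” A sketch, where the 1h/24h/72h cadence and the function name are hypothetical choices, not MyFitnessPal’s (or anyone else’s) actual setup:

```python
from datetime import datetime, timedelta

# Hypothetical cadence: hours after install at which to nudge non-activated users.
NUDGE_OFFSETS_HOURS = (1, 24, 72)


def due_nudges(installed_at, now, completed_core_action, already_sent):
    """Return the nudge offsets (in hours) that are due and not yet sent."""
    if completed_core_action:
        return []  # The user already reached the key action; stop nudging.
    return [
        hours
        for hours in NUDGE_OFFSETS_HOURS
        if hours not in already_sent and installed_at + timedelta(hours=hours) <= now
    ]


installed = datetime(2024, 1, 1, 9, 0)
print(due_nudges(installed, datetime(2024, 1, 2, 10, 0), False, {1}))  # [24]
```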

Use Gamification and Engagement Loops

Making the activation process engaging and rewarding can significantly boost completion rates.

Incorporate Gamification Elements: Introduce features that make the initial experience more interactive and rewarding.

Imprint, for example, uses gamification by allowing users to gain XP for completing lessons and encouraging daily streaks.

And of course, Duolingo is the king of gamification, with its streaks and notifications.
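Streaks are simple to compute, which is part of why they’re such a common mechanic. A simplified sketch; real apps, Duolingo included, layer on extras like streak freezes that this ignores.

```python
from datetime import date, timedelta


def current_streak(active_dates, today):
    """Consecutive days of activity ending today (or yesterday, if the user
    simply hasn't been active yet today)."""
    days = set(active_dates)
    cursor = today if today in days else today - timedelta(days=1)
    streak = 0
    while cursor in days:
        streak += 1
        cursor -= timedelta(days=1)
    return streak


active = [date(2024, 1, 1), date(2024, 1, 2), date(2024, 1, 3)]
print(current_streak(active, date(2024, 1, 3)))  # 3
print(current_streak(active, date(2024, 1, 4)))  # 3 (still alive until Jan 4 ends)
print(current_streak(active, date(2024, 1, 5)))  # 0
```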

Design Engagement Loops: Create systems that encourage users to return to the app.

Finch successfully uses engagement loops by having a self-care pet that goes on a journey and rewards users for checking back into the app at specific times. This incentivizes consistent engagement and helps build a habit.

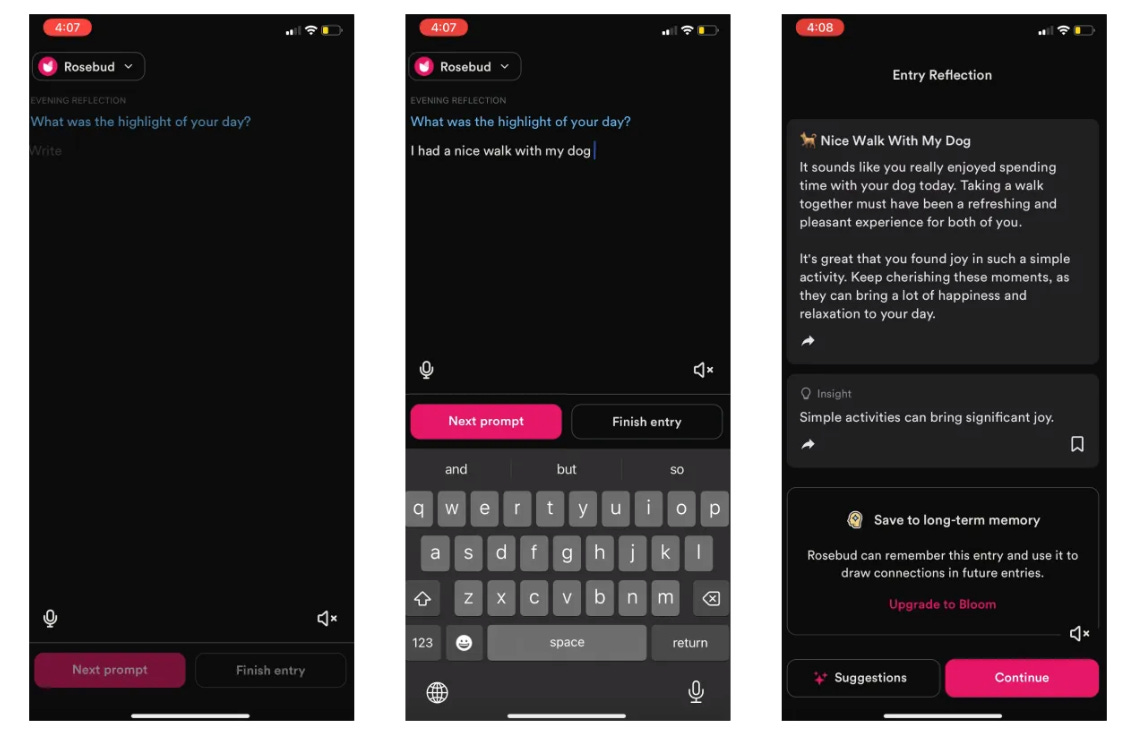

Move the Aha! moment into onboarding (before the paywall)

I wrote about this app Rosebud a while ago. It’s an AI journaling app.

I thought they did a great job of getting new users to experience the value before showing a paywall. You’re able to complete a full journal entry with their AI suggestions.

Not every app can bring its real experience in front of the paywall, and it may not be in your best interest, but you should be thinking about how to get users to experience value faster.

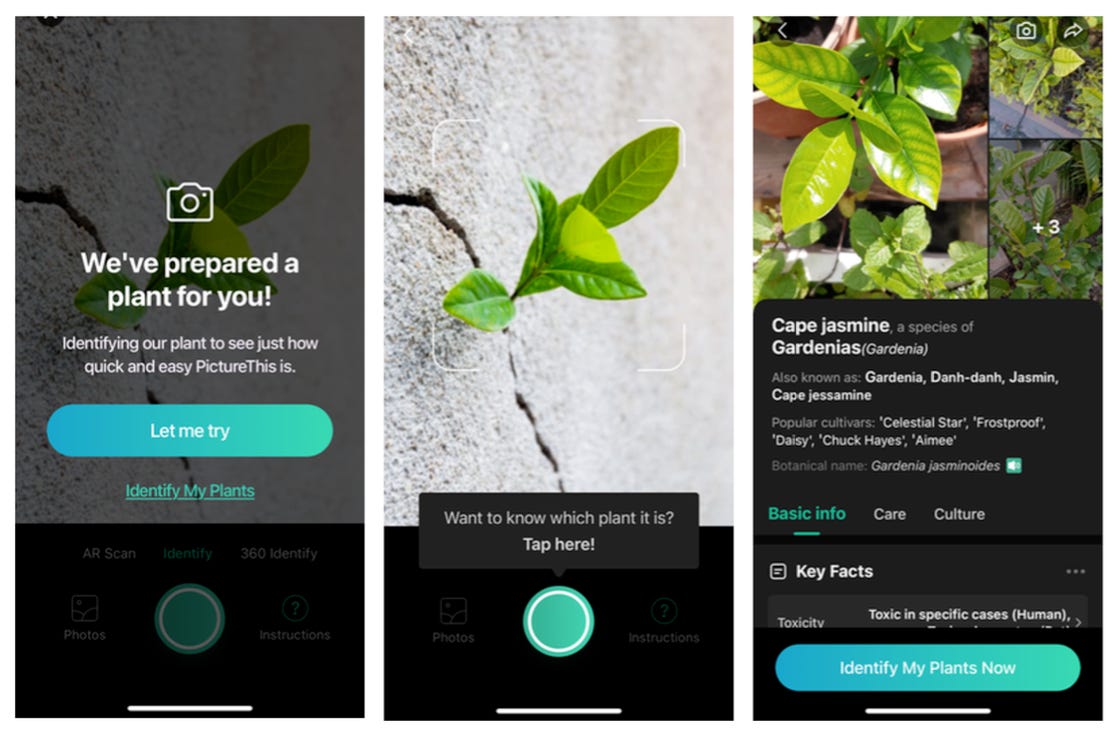

For a plant scanner app, you can show the functionality with a sample “plant scan”

Time to get to work!

You know what activation metrics and Aha moments are, you know the path to get there, and you even have some ideas for how to improve these in your own app.

I can’t do all the work for you.

Remember, creating a strong and engaging new user experience is hard work. If it were easy, everyone would have a great product with high retention.

But this is your opportunity! Most people won’t put in the work.

The more time you invest here, the better you’ll understand your users, the more engaging your product will become, and the more of a competitive advantage you’ll build.

📣 Want to help support and spread the word?

Go to my LinkedIn here and like, comment, or share my posts.

OR

Share this newsletter by clicking here.

Retention.Blog is sponsored by Botsi:

For the last year and a half, I’ve been working on building a company.

That company is Botsi. We power AI Pricing for your subscription app.

How does AI Pricing Work?

First, you’ll tell us what price points and paywalls you have

Second, you’ll send us some contextual and behavioral data on new users

Third, we’ll send you back the right paywall to show each user (in real-time)

Schedule a demo at Botsi.com →

P.S. When you schedule a demo and mention Retention.Blog you’ll get 20% off.
