Does it need to be a test?

Trust yourself

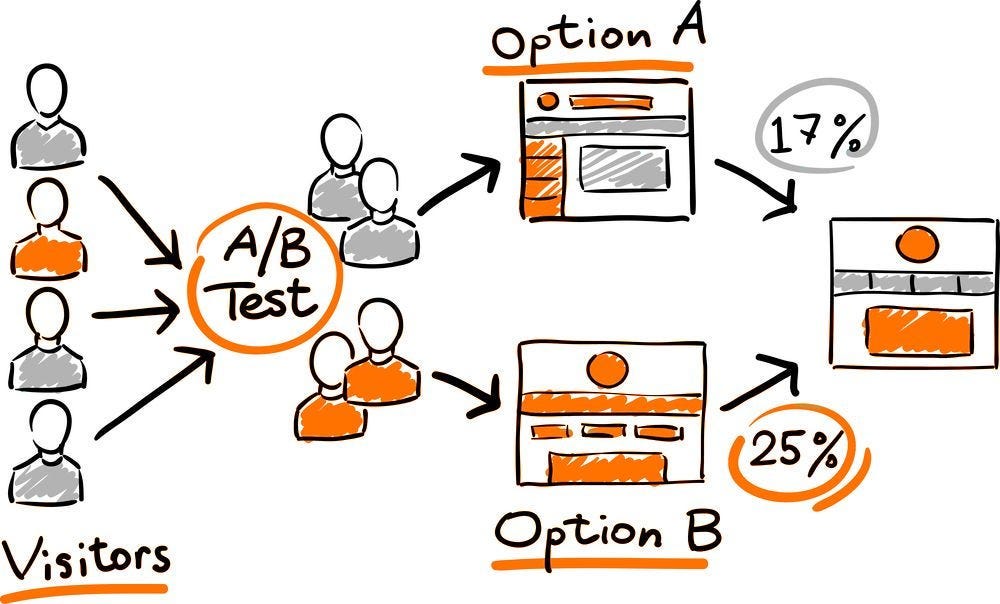

I love A/B testing.

I love the process of figuring out what the different variants should be.

I love brainstorming and prioritizing the different tests.

I love calculating how long we should run the test to reach statistical significance (stat sig) and figuring out whether we can run a multivariate test.

Image from ideafoster

I enjoy it so much that I wrote a post on experiment design and setup here.

But…

When building and improving a product, A/B testing can turn into a crutch.

A/B testing isn’t free.

When you decide to run a test, you’re saying it’s more important than these four other things, and that you’re willing to wait anywhere from a week to a month before deciding whether to launch it (and even then, you still might not be sure).

There is also the engineering work: setting up the test, making sure the variants are built and the audience is split correctly, and cleaning up the code after the test ends.

Opportunity cost

Time cost

Analytics cost

Engineering maintenance, setup, and cleanup
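One concrete way to verify that the audience is split correctly is a sample ratio mismatch (SRM) check: compare how many users actually landed in each variant with the split you intended. Below is a minimal sketch, assuming a two-variant test with an intended 50/50 split. The function name `srm_p_value` is hypothetical, and the p < 0.001 alarm threshold is a commonly used convention, not something from this post.

```python
import math


def srm_p_value(control_users: int, treatment_users: int,
                expected_control_share: float = 0.5) -> float:
    """Chi-square goodness-of-fit p-value (1 degree of freedom) for a two-variant split."""
    total = control_users + treatment_users
    expected_control = total * expected_control_share
    expected_treatment = total - expected_control
    chi2 = ((control_users - expected_control) ** 2 / expected_control
            + (treatment_users - expected_treatment) ** 2 / expected_treatment)
    # With 1 degree of freedom, P(chi-square > x) = erfc(sqrt(x / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


# Hypothetical assignment counts for an intended 50/50 test.
p = srm_p_value(10_000, 10_600)
if p < 0.001:
    print(f"Possible sample ratio mismatch (p = {p:.2g}); check assignment and logging first.")
```

If this check fires, the test results can’t be trusted no matter what the KPIs say, which is part of the hidden engineering cost of every test.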

And then there is the relinquishing of responsibility.

Instead of, “I’ve put my heart and soul into this new product feature and I know it’s a better experience for my users,” you are saying, “I think this is better, but I’m not super confident, so instead of making the feature great, I’m just going to test it.”

If you never had the luxury of being able to A/B test a feature, would you approach building and launching differently?

So when does A/B testing make sense and when does just shipping make sense?

Is it a small change?

Have you done any user research?

Have you seen any other products do this same thing?

Do you have accurate measurement tools in place?

Do you have a large volume of new or existing users?

Do you have existing A/B tests running?

Does it improve the user experience?

Have customers been asking for it?

If it’s a small change, ship it

Don’t waste time. If the change is small, you likely won’t detect a statistically significant difference with an A/B test anyway.
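To see why small changes rarely reach stat sig, you can flip the usual sample size math around and ask: with the traffic I have, what is the smallest lift I could reliably detect? Here’s a rough sketch, assuming a conversion-rate KPI, a two-sided two-proportion z-test at α = 0.05 with 80% power, and the simplification that both arms share the baseline variance. The function name and the traffic numbers are hypothetical.

```python
import math
from statistics import NormalDist


def min_detectable_lift(baseline_rate: float, users_per_variant: int,
                        alpha: float = 0.05, power: float = 0.8) -> float:
    """Approximate smallest relative lift a two-sided, two-proportion z-test
    can detect with the given power (uses the baseline variance for both arms)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    std_err = math.sqrt(2 * baseline_rate * (1 - baseline_rate) / users_per_variant)
    absolute_lift = (z_alpha + z_power) * std_err
    return absolute_lift / baseline_rate


# Hypothetical: 5% baseline conversion, 5,000 users in each variant.
print(f"{min_detectable_lift(0.05, 5_000):.0%}")  # prints 24%
```

In other words, at that volume you can only reliably detect a lift of roughly a quarter. A small tweak that moves conversion by a few percent won’t show up.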

User research

Like Nike: Just do it.

When you run an A/B test, you often don’t understand the user psychology behind the winning or losing variant.

Send surveys, talk to users, do usability tests, and learn how people feel about your product!

Most of the time you could have done a few interviews or usability tests and figured out right away if that new feature was going to be successful or not.

Copying other products

Copying other products is an amazing source of ideas. Half my newsletters recommend tactics other people use that you can use too.

Ask yourself: Is this a tactic a direct competitor is using for the exact same use case? If so, you might not need to test it.

Is it a tactic from a different industry? I would be much less confident here. Try to do some user research to figure out if people actually want it.

Accurate measurement

Making sure you have the right measurement and events in place to understand the impact of your work is a full-time job.

You likely don’t have 100% perfect measurement. No one really does.

How confident are you in your data? If you’re not at least 80% confident, why are you running a test?

What does your user volume look like?

(I should have put this one first on the list)

If you don’t have a large volume of users, running lots of A/B tests will either slow you down dramatically or never produce conclusive results.

What’s the user volume threshold? You don’t have to guess: use a tool like CXL’s calculator here (select Pre-Test Analysis).

But you’ll probably overestimate the uplift you’ll see and not run the test long enough, or stop the test too soon and get an inaccurate read.
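Stopping too soon is the classic “peeking” problem. If you check results every day and stop the first time p < 0.05, your real false-positive rate ends up well above 5%. A small simulation makes this concrete. It’s a sketch under stated assumptions: A/A tests (both arms identical at 10% conversion), a pooled two-proportion z-test, and ten equally spaced looks. All function names and parameters are illustrative.

```python
import math
import random


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return math.erfc(abs(z) / math.sqrt(2))


def simulate_peeking(n_experiments: int = 400, looks: int = 10,
                     users_per_look: int = 200, rate: float = 0.10,
                     alpha: float = 0.05, seed: int = 7) -> tuple[float, float]:
    """Simulate A/A tests (no real difference) and return the false-positive rate
    when checking once at the end vs. stopping at the first significant look."""
    rng = random.Random(seed)
    wins_at_end = 0
    wins_with_peeking = 0
    for _ in range(n_experiments):
        conv_a = conv_b = n = 0
        significant_at_any_look = False
        for _ in range(looks):
            conv_a += sum(rng.random() < rate for _ in range(users_per_look))
            conv_b += sum(rng.random() < rate for _ in range(users_per_look))
            n += users_per_look
            p_value = two_proportion_p_value(conv_a, n, conv_b, n)
            if p_value < alpha:
                significant_at_any_look = True
        wins_with_peeking += significant_at_any_look
        wins_at_end += p_value < alpha  # only the final look counts here
    return wins_at_end / n_experiments, wins_with_peeking / n_experiments


fixed, peeking = simulate_peeking()
print(f"False positives, one look at the end: {fixed:.1%}")
print(f"False positives, stop at first 'win': {peeking:.1%}")
```

Since there is no real difference between the arms, every “winner” here is noise. You should see the peeking rate come out several times higher than the single-look rate.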

Quick guidance: If you don’t think you’ll get 5-10k users per variant, it’s going to be tough to reach stat sig. Ideally, you have 10X that volume.
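If you want a feel for the kind of pre-test math these calculators do, the standard approximation for comparing two conversion rates is short enough to write yourself. This is a sketch, not a replacement for a proper pre-test analysis. It assumes a two-sided test at α = 0.05 with 80% power and an even traffic split, and the function names are hypothetical.

```python
import math
from statistics import NormalDist


def users_per_variant(baseline_rate: float, relative_lift: float,
                      alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate users needed in each variant for a two-sided, two-proportion z-test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


def days_to_run(baseline_rate: float, relative_lift: float,
                eligible_users_per_day: int, variants: int = 2) -> int:
    """Days until every variant reaches its sample size, assuming an even traffic split."""
    n = users_per_variant(baseline_rate, relative_lift)
    return math.ceil(n * variants / eligible_users_per_day)


# Hypothetical: 10% baseline conversion, hoping for a 20% relative lift (10% -> 12%).
print(users_per_variant(0.10, 0.20))  # roughly 3,800 users per variant
print(users_per_variant(0.10, 0.10))  # nearly 4x as many for half the lift
print(days_to_run(0.10, 0.20, eligible_users_per_day=500))
```

The required sample grows with the inverse square of the lift, which is why overestimating the uplift leads straight to tests that run too short.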

High-volume A/B testing is really only possible once you have hundreds of thousands to millions of active users.

Do you have a bunch of A/B tests already running in this area?

Think carefully about whether this new test could conflict with existing experiments.

And go back to “Is it a big or small change?” If it’s small, ship it. If it’s big, you should probably wait anyway, and do some user research in the meantime to figure out whether you need to test it at all.

Does it materially improve the user experience?

If it does, then why are you testing it? Because you’re afraid you’re wrong? Dig into why you think you might be wrong.

If it doesn’t materially improve the user experience, is it the right thing to be working on?

Have customers been asking for it?

If yes, then what are you waiting for?? Ship it!

If they haven’t, why do you think it’s important? You should have some type of quantitative or qualitative research backing it up.

What other tools should you have at your disposal besides A/B tests?

No-harm tests

Your goal with this type of test is not to find a winner, but to make sure you’re not impacting anything negatively.

With user experience changes, it’s common not to see a big jump in your KPIs, so your goal is to make sure there isn’t a decrease.
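In statistical terms, a no-harm test is usually framed as a non-inferiority test. Instead of asking “is B better?”, you ask “can we rule out B being worse by more than a margin we’d tolerate?” Here’s a minimal sketch, assuming a conversion-rate KPI, an absolute margin of 0.5 percentage points, and a one-sided z-test. The margin is a business decision, and the function name and counts are hypothetical.

```python
import math
from statistics import NormalDist


def no_harm_check(conv_control: int, n_control: int,
                  conv_treatment: int, n_treatment: int,
                  margin: float = 0.005, alpha: float = 0.05) -> tuple[float, bool]:
    """One-sided non-inferiority z-test on conversion rates.

    H0: treatment converts worse than control by at least `margin` (absolute).
    Returns (p_value, passed); passed=True means a drop bigger than `margin` is ruled out.
    """
    p_c = conv_control / n_control
    p_t = conv_treatment / n_treatment
    # Unpooled standard error of the difference in conversion rates.
    se = math.sqrt(p_c * (1 - p_c) / n_control + p_t * (1 - p_t) / n_treatment)
    z = (p_t - p_c + margin) / se
    p_value = 1 - NormalDist().cdf(z)
    return p_value, p_value < alpha


# Hypothetical counts: 10.00% vs 9.95% conversion on 50,000 users each.
p_value, passed = no_harm_check(5_000, 50_000, 4_975, 50_000)
print(f"p = {p_value:.3f}, no meaningful harm: {passed}")
```

Note that this can pass even when the treatment is slightly lower, as long as the drop is confidently smaller than the margin you chose.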

Phased rollout

This can look similar to an A/B test, but it’s used more for risk mitigation.

Start by rolling out to 10% of your audience, make sure there aren’t any bugs, and if everything looks good, slowly ramp up the release.
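A phased rollout works best when each user lands in the same bucket every time, so ramping from 10% to 25% to 100% only ever adds users instead of flipping people in and out of the feature. One common way to do this is to hash a stable user ID. This sketch assumes string user IDs. The function names and the `new_checkout` feature key are hypothetical, and most feature-flag tools handle this for you.

```python
import hashlib


def rollout_bucket(user_id: str, feature: str) -> float:
    """Map (feature, user) to a stable number in [0, 100)."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest[:8], 16) / 2**32 * 100


def is_enabled(user_id: str, feature: str, rollout_percent: float) -> bool:
    """A user sees the feature once the rollout percentage passes their bucket."""
    return rollout_bucket(user_id, feature) < rollout_percent


# Ramp a hypothetical "new_checkout" feature from 10% to 25%.
users = [f"user-{i}" for i in range(10_000)]
at_10 = {u for u in users if is_enabled(u, "new_checkout", 10)}
at_25 = {u for u in users if is_enabled(u, "new_checkout", 25)}
print(len(at_10), len(at_25), at_10 <= at_25)  # the 10% group is a subset of the 25% group
```

Hashing the feature name together with the user ID means each feature gets its own independent buckets, so the same users aren’t always first in line for every rollout.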

Anything else I missed here?

When do I think that A/B tests are the right approach?

Changes to monetization.

Pricing, paywalls, packages, etc.

Monetization makes your business run, so the effort here is almost always worth it.

You don’t want to make monetization changes blindly.

It’s very hard to do accurate user research on how much someone is willing to pay. It’s never truly accurate until you’re actually asking them to pay.

Asking people to pay for your product is always a worse experience for the user, so how are you supposed to step into their shoes and figure out what’s right for them?

You can’t use your gut sense or intuition about what makes a good experience for pricing. You’ll either price too low or be too scared to make changes.

Is this advice for everyone?

No.

If you have millions of active users, then you can A/B test at scale much more efficiently. And you probably don’t need me to tell you that if you’re at a company of that size 🙂

All of this advice is aimed at small to medium-sized companies, where any single A/B test carries a larger opportunity cost and the math makes reaching stat sig on most tests much harder.

I love A/B tests as much as anybody, and early in my career, I defaulted to A/B testing everything.

But the more products and companies I work with, the more I realize when you have a hammer, everything looks like a nail.

Get some more tools in your toolbox.
